So I just ran my second paid online survey through Amazon Mechanical Turk. Despite searching online for how to do this right and treat participants fairly (there is debate over whether Turkers are “workers” or research participants, and over how much compensation is fair in each case; this article mainly discusses the worker side), I still received a flurry of emails (OK, from maybe 30 people out of over 1,000 participants) from people who had problems.

Some background: before launching, I used this site to help set up the survey correctly; it offered advice similar to this site. I also decided against using “attention filter” questions based on this article.

Here’s more about what I learned this time through:

Make it clear that this is a research study, not a “work” project, and that you are compensating participants accordingly. (You do have IRB approval, right?? Say so in your HIT/task.) Some people argue that research participants should be paid at least minimum wage: currently $7.25 an hour under federal law, or $15 an hour under living-wage recommendations. If you're not asking for highly skilled expertise or exposing people to high risk, that seems the fairest standard (again, your IRB can help you decide). At the federal minimum, a 5-minute survey works out to about 60 cents; I'll admit that because this was an especially low-expertise, low-risk task, I offered 50 cents, or $6 per hour. I'll leave aside the issue of taxes and reporting; as far as I know, anyone completing tasks on MTurk is responsible for reporting their own income from the service. At the other extreme, I also collected data from in-person volunteers in Gainesville and offered them no compensation.
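The pay arithmetic is simple, but it's easy to slip when the survey length changes. Here's a minimal Python sketch that converts an hourly target into a per-task payment and back. The function names are my own (hypothetical), it assumes the survey takes exactly the estimated number of minutes, and it ignores any fees the platform adds on top.

```python
def per_task_pay(hourly_rate: float, minutes: float) -> float:
    """Dollars to pay for one task at a target hourly rate, rounded to the nearest cent."""
    return round(hourly_rate * minutes / 60, 2)


def effective_hourly_rate(pay: float, minutes: float) -> float:
    """Hourly rate implied by paying `pay` dollars for a task of `minutes` length."""
    return round(pay * 60 / minutes, 2)


# Federal minimum wage ($7.25/hr) for a 5-minute survey: about 60 cents.
print(per_task_pay(7.25, 5))
# Living-wage recommendation ($15/hr) for the same survey.
print(per_task_pay(15.00, 5))
# What 50 cents for 5 minutes works out to per hour.
print(effective_hourly_rate(0.50, 5))
```

If you round up instead of to the nearest cent, you'll never dip below your target rate; the sketch rounds to the nearest cent to match the 60-cent figure above.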

Screener questions are best treated as a separate HIT, which you may or may not compensate. You can still keep the conditions you're screening for vague, so as not to bias participants. If you don't make screening a separate task, at the very least state clearly in your instructions and end-of-survey messages that some people will be screened out.

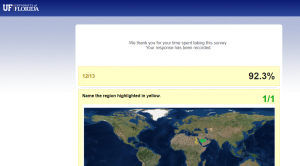

If you have Turkers enter a code when they finish the survey, be aware that sometimes the code doesn't work (for me, that's what the 30 emails were about; only about 3 were screen-fails). In that case, ask Turkers to take a screenshot of the last page (or save or print it as a PDF) and send it to you, rather than sending their worker ID: the worker ID is protected information and doesn't really help you figure out what went wrong. In my case, the 30 people had somehow been kicked out of the survey early: out of 18 questions, some saw only 16, and one saw as few as 5. I still have to go back to the survey software company to find out what happened, but the screenshots prove these weren't people who screen-failed.

I posted my survey at about 9:30 am and had 1,000 responses by 11 am.

I gratefully acknowledge all my participants, and especially those who took the time to write and help me figure out what wasn’t working so I can do it better next time.
